I am glad to announce, firstly, the release of whitepixel, an open source GPU-accelerated password hash auditing software for AMD/ATI graphics cards that qualifies as the world's fastest single-hash MD5 brute forcer; and secondly, that a Linux computer built with four dual-GPU AMD Radeon HD 5970 graphics cards for the purpose of running whitepixel is the first demonstration of eight AMD GPUs concurrently running this type of cryptographic workload on a single system. This software and hardware combination achieves a rate of 28.6 billion MD5 password hashes tested per second, consumes 1230 Watt at full load, and costs 2700 USD as of December 2010. The capital and operating costs of such a system are only a small fraction of running the same workload on Amazon EC2 GPU instances, as I will detail in this post.

Software: whitepixel

Currently, whitepixel supports attacking MD5 password hashes only, but more hash types will come soon. What prompted me to write it was that sometime in 2010, ATI Catalyst drivers started supporting up to 8 GPUs (on Linux at least) when previously they were limited to 4, which made it very exciting to be able to play with this amount of raw computing performance, especially given that AMD GPUs are roughly 2x-3x faster than Nvidia GPUs on ALU-bound workloads. Also, I had previously worked on MD5 chosen-prefix collisions on AMD/ATI GPUs. I had a decent MD5 implementation, wanted to optimize it further, and put it to other uses.

Overview of whitepixel

- It is the fastest of all single-hash brute forcing tools: ighashgpu, BarsWF, oclHashcat, Cryptohaze Multiforcer, InsidePro Extreme GPU Bruteforcer, ElcomSoft Lightning Hash Cracker, ElcomSoft Distributed Password Recovery.

- Targets AMD HD 5000 series and above GPUs, which are roughly 2x-3x faster than high-end Nvidia GPUs on ALU-bound workloads.

- Best AMD multi-GPU support. Works on at least 8 GPUs. Whitepixel is built directly on top of CAL (Compute Abstraction Layer) on Linux. Other brute forcers support fewer AMD GPUs due to limitations of the OpenCL libraries or of the Windows platform and drivers.

- Hand-optimized AMD IL (Intermediate Language) MD5 implementation.

- Leverages the bitalign instruction to implement rotate operations in 1 clock cycle.

- MD5 step reversing. The last few of the 64 steps are pre-computed in reverse so that the brute forcing loop only needs to execute 46 of them to evaluate potential password matches, which speeds it up by 1.39x.

- Linux support only.

- Last but not least, it is the only performant open source brute forcer for AMD GPUs. The author of BarsWF recently open sourced his code but as shown in the graphs below it is about 4 times slower.
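The step-reversing idea in the list above can be illustrated with a minimal Python sketch. It is not whitepixel's code, and it assumes a simplified scenario in which candidates differ only in the first message word (the first 4 characters), so the last 15 steps, which never read that word, can be undone once per target hash. The helper names (`forward`, `backward`, `pad`) are hypothetical. This basic version leaves 49 forward steps per candidate; reaching the 46 steps mentioned above requires further refinements that are not shown here.

```python
import hashlib
import math
import struct

MASK = 0xFFFFFFFF
# Per-step constants and rotate amounts from the MD5 specification (RFC 1321).
K = [int(abs(math.sin(i + 1)) * 2**32) & MASK for i in range(64)]
S = [7, 12, 17, 22] * 4 + [5, 9, 14, 20] * 4 + [4, 11, 16, 23] * 4 + [6, 10, 15, 21] * 4
IV = (0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476)


def rotl(x, n):
    return ((x << n) | (x >> (32 - n))) & MASK


def rotr(x, n):
    return ((x >> n) | (x << (32 - n))) & MASK


def f_and_index(i, b, c, d):
    """Round function value and message-word index for step i (0-based)."""
    if i < 16:
        return (b & c) | (~b & d & MASK), i
    if i < 32:
        return (d & b) | (~d & c & MASK), (5 * i + 1) % 16
    if i < 48:
        return b ^ c ^ d, (3 * i + 5) % 16
    return (c ^ (b | (~d & MASK))) & MASK, (7 * i) % 16


def forward(state, m, first, last):
    """Run steps first..last-1 of the MD5 compression function."""
    a, b, c, d = state
    for i in range(first, last):
        f, g = f_and_index(i, b, c, d)
        a, b, c, d = d, (b + rotl((a + f + K[i] + m[g]) & MASK, S[i])) & MASK, b, c
    return a, b, c, d


def backward(state, m, first, last):
    """Undo steps last-1 down to first, given the state after step last-1."""
    a, b, c, d = state
    for i in reversed(range(first, last)):
        # A step maps (A, B, C, D) to (D, B', B, C), so the old B, C, D are known.
        old_b, old_c, old_d = c, d, a
        f, g = f_and_index(i, old_b, old_c, old_d)
        old_a = (rotr((b - old_b) & MASK, S[i]) - f - K[i] - m[g]) & MASK
        a, b, c, d = old_a, old_b, old_c, old_d
    return a, b, c, d


def pad(password):
    """Pad a short message (< 56 bytes) into one block of 16 little-endian words."""
    block = password + b"\x80" + b"\x00" * (55 - len(password))
    block += struct.pack("<Q", 8 * len(password))
    return struct.unpack("<16I", block)


target = hashlib.md5(b"password").digest()
# Subtract the IV to recover the internal state after the last step.
final = tuple((h - iv) & MASK for h, iv in zip(struct.unpack("<4I", target), IV))

m = pad(b"password")
known = (0,) + m[1:]  # word 0 is treated as unknown; steps 49..63 never read it
reversed_state = backward(final, known, 49, 64)  # done once per target hash

# Per candidate: run only the first 49 steps, then compare.
candidate_state = forward(IV, m, 0, 49)
print(candidate_state == reversed_state)
```

The comparison succeeds only for the matching candidate, so the inner loop can skip the reversed steps entirely.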

That said, speed is not everything. Whitepixel is currently very early-stage software and lacks features such as cracking multiple hashes concurrently, charset selection, and attacking hash algorithms other than MD5.

To compile and test whitepixel, install the ATI Catalyst Display drivers (I have heavily tested 10.11), install the latest ATI Stream SDK (2.2 as of December 2010), adjust the include path in the Makefile, build with "make", and start cracking with "./whitepixel $HASH". Performance-wise, whitepixel scales linearly with the number of GPUs and the number of ALUs times the frequency clock (as documented in this handy reference from the author of ighashgpu).

Performance

The first chart compares single-hash MD5 brute forcers on the fastest single GPU they support:

- whitepixel 1: HD 5870: 4200 Mhash/sec

- ighashgpu 0.90.17.3 with "-t:md5 -c:a -min:8 -max:8": HD 5870: 3690 Mhash/sec, GTX 580: 2150 Mhash/sec (estimated)

- oclHashCat 0.23 with "-n 160 --gpu-loops 1024 -m 0 '?l?l?l?l' '?l?l?l?l'": HD 5870: 2740 Mhash/sec, GTX 580: 1340 Mhash/sec (estimated)

- BarsWF CUDA v0.B or AMD Brook 0.9b with "-c 0aA~ -min_len 8:": GTX 580: 1740 Mhash/sec (estimated), HD 5870: 1240 Mhash/sec

The second chart compares single-hash MD5 brute forcers running on as many of the fastest GPUs they each support. Note that 8 x HD 5870 has not been tested with any of the tools because it is unknown if this configuration is supported:

- whitepixel 1: 4xHD 5970: 28630 Mhash/sec

- ighashgpu 0.90.17.3 with "-t:md5 -c:a -min:8 -max:8": 8xGTX 580: 17200 Mhash/sec (estimated), 2xHD 5970: 12600 Mhash/sec

- BarsWF CUDA v0.B or AMD Brook 0.9b with "-c 0aA~ -min_len 8:": 8xGTX 580: 13920 Mhash/sec (estimated)

- oclHashCat 0.23 with "-n 160 --gpu-loops 1024 -m 0 '?l?l?l?l' '?l?l?l?l'": 8xGTX 580: 10720 Mhash/sec (estimated)

Hardware: 4 x Dual-GPU HD 5970

To demonstrate and use whitepixel, I built a computer supporting four of the currently fastest graphics cards: four dual-GPU AMD Radeon HD 5970. One of my goals was to keep the cost as low as possible without sacrificing system reliability. Some basic electrical and mechanical engineering was necessary to reach this goal. The key design points were:

- Modified flexible PCIe extenders: allows down-plugging PCIe x16 video cards into x1 connectors, enables running off an inexpensive motherboard, gives freedom to arrange the cards to optimize airflow and cooling (max GPU temps 85-90 C at 25 C ambient temp).

- Two server-class 560 Watt power supplies instead of one high-end desktop one: increases reliability, 80 PLUS Silver certification increases power efficiency (measured 88% efficiency).

- Custom power cables to the video cards: avoids reliance on Y-cable splitters and numerous cable adapters which would ultimately increase voltage drop and decrease system reliability.

- Rackable server chassis: high density of 8 GPUs in 3 rack units.

- Entry-level Core 2 Duo processor, 2GB RAM, onboard LAN, diskless: low system cost, gives ability to boot from LAN.

- Undersized ATX motherboard: allows placing all the components in a rackable chassis.

- OS: Ubuntu Linux 8.04 64-bit.

Detailed list of parts:

Note: I could have built it with a less expensive and low-power processor instead, and saved about $100, but I had that spare Core 2 Duo and used it instead.

The key design points are explained in great detail in the coming sections.

Down-plugging x16 Cards in x1 Connectors

It is not very well known, but the PCIe specification electrically supports down-plugging a card in a smaller link connector, such as an x16 card in an x1 connector. This is possible because the link width is dynamically detected during link initialization and training. However PCIe mechanically prevents down-plugging by closing the end of the connector, for a reason that I do not understand. Fortunately this can be worked around by cutting the end of the PCIe connector, which I did by using a knife to tediously carve the plastic. But another obstacle to down-plugging especially long cards into x1 connectors is that motherboards have tall components such as heatsinks or small fans that might come in contact with the card. For these reasons, I bought flexible PCIe x1 extenders in order to allow placing the cards anywhere in the vicinity of the motherboard, and to cut their connectors instead of the motherboard's (easier and less risky). Here are a few links to manufacturers of x1 extenders:

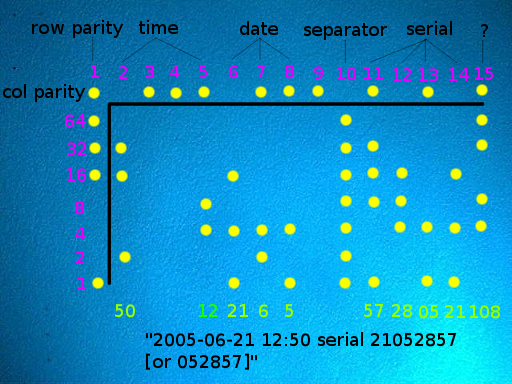

Shorting Pins for "Presence Detection"

- A1: PRSNT1# Hot-plug presence detect

- B17: PRSNT2# Hot-plug presence detect (for x1 cards)

- B31: PRSNT2# Hot-plug presence detect (for x4 cards)

- B48: PRSNT2# Hot-plug presence detect (for x8 cards)

- B81: PRSNT2# Hot-plug presence detect (for x16 cards)

The motherboard connects PRSNT1# to ground. PCIe cards must have an electrical trace connecting PRSNT1# to the corresponding PRSNT2# pin depending on their link width. The motherboard considers a card present if it detects ground on one of the PRSNT2# pins. It is unclear to me whether this presence detection mechanism is supposed to be used only in the context of hot-plugging (yes, PCIe supports hot-plugging), or to detect the presence of cards in general (e.g. during POST). One thing I have experimentally verified is that some, but not all, motherboards use this mechanism to detect the presence of cards during POST. Down-plugging an x16 card in an x1 connector on these motherboards results in a system that does not boot or does not detect the card. The solution is to simply short pins A1 and B17 to do exactly what a real x1 card does:

I had a few motherboards that did not allow down-plugging. With this solution all of them worked fine. This meant I could build my system using less expensive motherboards with, for example, four x1 connectors instead of four x16 connectors. A reduced width only means reduced bandwidth. However even an x1 PCIe 1.0 link allows for 250 MB/s of bandwidth, which is far above my password cracking needs (the main kernel of whitepixel sends and receives data on the order of hundreds of KB per second).

The motherboard I chose is the Gigabyte GA-P31-ES3G with one x16 connector and three x1 connectors. It has the particularity of being undersized (only 19.3 cm wide) which helped me fit all the hardware in a single rackable chassis.

Designing for 1000+ Watt

Power was of course the other tricky part. Four HD 5970 cross the 1000 Watt mark. I chose to split the load on two (relatively) low-powered, but less expensive power supplies. In order to know how to best spread the load I first had to measure the current drawn by a card under my workload from its three 12 Volt power sources: PCIe connector, 6-pin, and 8-pin power connectors. I used a clamp meter for this purpose. This is where another advantage of the flexible PCIe extenders becomes apparent: the 12V wires can be physically isolated from the others on the ribbon cable to clamp the meter around them. In my experiments with whitepixel, the current drawn by an HD 5970 is (maximum allowed by PCIe spec in parentheses):

- PCIe connector (idle/load): 1.1 / 3.7 Amp (PCIe max: 6.25)

- 6-pin connector (idle/load): 0.9 / 6.7 Amp (PCIe max: 6.25, the card is slightly over spec)

- 8-pin connector (idle/load): 2.2 / 11.4 Amp (PCIe max: 12.5)

- Total (idle/load): 4.2 / 21.8 Amp (PCIe max: 25.0)

(Power consumption varied by up to +/-3% depending on the card, but it could be due to my clamp meter which is only 5% accurate. The PCIe connector also provides a 3.3V source, but the current draw here is negligible.)

The total wattage for the above numbers, per HD 5970, is 50 / 262 Watt (idle/load) which approximately matches the TDP specified by AMD: 50 / 294 Watt.
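The per-card totals follow directly from the clamp meter readings. The short Python sketch below redoes the arithmetic using only the currents and per-connector limits listed above; the variable names are illustrative.

```python
RAIL_VOLTS = 12.0

# source: (idle amps, load amps, max amps per the list above)
measured = {
    "slot": (1.1, 3.7, 6.25),
    "6-pin": (0.9, 6.7, 6.25),
    "8-pin": (2.2, 11.4, 12.5),
}

idle_amps = sum(idle for idle, _, _ in measured.values())
load_amps = sum(load for _, load, _ in measured.values())
idle_watts = idle_amps * RAIL_VOLTS
load_watts = load_amps * RAIL_VOLTS

# Connectors whose measured load current exceeds the listed limit.
over_spec = [name for name, (_, load, limit) in measured.items() if load > limit]

print(f"idle: {idle_amps:.1f} A, {idle_watts:.0f} W")
print(f"load: {load_amps:.1f} A, {load_watts:.0f} W")
print("over spec under load:", over_spec)
```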

As to the rest of the system (motherboard and CPU; the system is diskless), it draws a negligible 30 Watt at all times, about half from the 12V rail (I measured 1.5 Amp) and half from the others. It stays the same at idle and under the load imposed by whitepixel because the software does not perform any intensive computation on the processor. That said, I planned for 3 Amp, as measured with 1 core busy running "sha1sum /dev/zero".

Standardizing Power Distribution to the Video Cards

I decided early on to use server-class PSUs for their reliability and because few desktop PSUs are certified 80 PLUS Silver or better (plain 80 PLUS was not enough). One inconvenience of server-class PSUs is that few come with enough PCIe 6-pin or 8-pin power connectors for the video cards. I came up with a workaround for this that brings additional advantages...

The various 12V power connectors in a computer (ATX, EPS12V, ATX12V) are commonly rated up to 6, 7, or 8 Amp per wire. On the other hand the PCIe specification is excessively conservative when rating the 6-pin connector 75 Watt (2.08 Amp/wire, 3 12V wires, 3 GND), and the 8-pin connector 150 Watt (4.17 Amp/wire, 3 12V wires, 5 GND). There is no electrical reason for being so conservative. So I bought a few parts (read this great resource about computer power cables):

- Molex crimper

- Yellow and black stranded 16AWG wire (large gauge to minimize voltage drop, without being too inconvenient to crimp)

- Molex Mini-fit Jr. 4-circuit male and female housings (the same kind used for 4-pin ATX12V cable and motherboard connectors)

- Molex Mini-fit Jr. terminals (metallic pins for the above connector)

And built two types of custom cables designed for 6.25 Amp per 16AWG wire:

- PCIe 6-pin connector to custom 4-pin connector (with one 12V pin, one GND pin, two missing pins) for 6.25 Amp total

- PCIe 8-pin connector to custom 4-pin connector (with two 12V pins, two GND pins) for 12.5 Amp total

With these cables connected to the video cards, I have at the other end of them a standardized set of 4-pin connectors (with 1 or 2 wire pairs) that remain to be connected to the PSU. I used a Molex pin extractor to extract the 12V and GND wires from all unused PSU connectors (ATX, ATX12V, EPS12V, etc) which I reinserted in Molex Mini-fit Jr. housings to build as many 4-pin connectors as needed (again with either 1 or 2 wire pairs).

Essentially, this method standardizes power distribution in a computer to 4-pin connectors and at the same time gets rid of the unnecessary 2.08 or 4.17 Amp/wire limit imposed by PCIe. Manufacturing the custom cables is a one-time cost, but I am able, with the Molex pin extractor, to reconfigure any PSU in a minute or so to build as many 4-pin connectors as it safely electrically allows. It is also easy to change from powering 6-pin connectors or 8-pin connectors by reconfiguring the number of wire pairs. Finally, all the cable lengths have been calculated to minimize voltage drop to under 100 mV.
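As a rough illustration of the voltage drop budget, here is a small Python sketch. It rests on assumptions that are not in the text above: a nominal resistance of about 13.17 milliohm per metre for 16AWG copper at room temperature (from standard AWG tables), a drop counted over both the 12V and the GND conductor, and no allowance for contact resistance in the crimped terminals. The function names are hypothetical.

```python
# Assumed nominal resistance of 16AWG copper wire, in ohms per metre.
OHMS_PER_METER_16AWG = 0.01317


def drop_volts(amps, length_m, conductors_in_path=2):
    """Voltage lost over a cable of the given length (12V wire plus return wire)."""
    return amps * OHMS_PER_METER_16AWG * length_m * conductors_in_path


def max_length_m(amps, budget_volts, conductors_in_path=2):
    """Longest cable that stays within the voltage drop budget."""
    return budget_volts / (amps * OHMS_PER_METER_16AWG * conductors_in_path)


# 6.25 Amp per wire and a 100 mV budget, as in the text above.
print(f"drop over 30 cm: {1000 * drop_volts(6.25, 0.30):.0f} mV")
print(f"max length for 100 mV: {100 * max_length_m(6.25, 0.100):.0f} cm")
```

Under these assumptions, a cable carrying 6.25 Amp per wire would need to stay at roughly 60 cm or shorter to remain within 100 mV.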

Spreading ~90 Amp @ 12 Volt Across 2 PSUs

As per my power consumption numbers above for a single HD 5970 card, four of them plus the rest of the system total 4 * 21.8 + ~3 = ~90 Amp at 12 Volt. To accommodate this, I used two server-class 560 Watt Supermicro PWS-562-1H (aka Compuware CPS-5611-3A1LF) power supplies rated 80 PLUS Silver with a 12V rail capable of 46.5 Amp. Based on the measurements above, I decided to spread the load as such:

- First PSU to power the four 8-pin connectors:

4 * 11.4 (8-pin) = 45.6 Amp

- Second PSU to power everything else (four 6-pin connectors + four cards via PCIe slot + ATX connectors for mobo/CPU):

4 * 6.7 (6-pin) + 4 * 3.7 (slot) + ~3 (mobo/CPU) = ~45 Amp

With the current spread almost equally between the two PSUs, they operate slightly under 100% of their maximal ratings(!) In a power supply, the electrolytic capacitors are often the components with the shortest life. They are typically rated 10000 hours. So, although it is safe to operate the PSUs at their maximal ratings 24/7, I would expect them to simply wear out after about a year.
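The split described above can be checked against the 46.5 Amp rating of each 12V rail with a few lines of Python, using only the figures given in this section:

```python
RAIL_LIMIT_AMPS = 46.5  # 12V rail rating of each PSU
CARDS = 4

psu1_amps = CARDS * 11.4                      # four 8-pin connectors
psu2_amps = CARDS * 6.7 + CARDS * 3.7 + 3.0   # 6-pin + slot + mobo/CPU

for name, amps in (("PSU 1", psu1_amps), ("PSU 2", psu2_amps)):
    share = 100 * amps / RAIL_LIMIT_AMPS
    print(f"{name}: {amps:.1f} A, {share:.0f}% of the rail rating")
```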

During one of my tests, I accidentally booted the machine with the video cards wired in a way that one of the power supplies was operating 10% above its max rating. I started a brute forcing session. One of the PSUs became more noisy than usual. It ran fine for half a minute. Then the machine suddenly shut down. I checked my wiring and realized that it must have been pulling about 51 Amp, so the over current protection kicked in! This is where the quality of server-class PSUs is appreciable... I corrected the wiring and this PSU is still running fine to this day.

Note that any other way of spreading the load would be less efficient. For example if both the 6-pin (75W) and 8-pin (150W) connectors of one card are connected to the same PSU, and if the remaining cards are powered in a way that spreads the power equally at full load, then the equilibrium would be lost when this one card stops drawing power (and the others do not), because one PSU would see a drop of (up to) 225W and the other at best 75W if it was powering the slot. A PSU at very low load is less efficient. When using two PSUs I recommend having one power the slot and the 6-pin connector, and the other the 8-pin one (150W each).

88% Efficiency at Full Load

4 cards * 262 Watt + 30 Watt for mobo/CPU = 1078 output Watt

However, I measured 1230 input Watt with a Kill-a-Watt on the wall outlet. So the power efficiency is 1078 / 1230 = 88%, even better than the 80 PLUS Gold level even though the PSUs are certified only Silver! This demonstrates another quality of server-class PSUs. This level of efficiency may be possible due to my configuration drawing most of its power from the highly-optimized 12 Volt rails, whereas the official 80 PLUS tests are conducted with a significant fraction of load on the other rails (-12V, 3.3V, 5V, 5VSB) which are known to be less efficient. After all, even Google got rid of all rails but 12V.

84% Efficiency when Idle

Similarly, at idle I measure a PSU output power of:

4 cards * 50 Watt + 30 Watt for mobo/CPU = 230 output Watt

While the Kill-a-Watt reports 275 input Watt, which suggests an efficiency of 84%. At this level each PSU outputs 115 Watt so they function at 20% of their rated 560 Watt. According to the 80 PLUS Silver certification a PSU must be 85% efficient at this load. My observation matches this within 1% (slight inaccuracies of the clamp meter).
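Both efficiency figures come from the same calculation: estimated DC output divided by measured wall input. A minimal Python sketch with the numbers from this post (the function name is illustrative):

```python
PSU_RATED_WATTS = 560
PSU_COUNT = 2


def efficiency(cards, watts_per_card, system_watts, wall_watts):
    """Return (estimated DC output in Watt, output / input ratio)."""
    dc_watts = cards * watts_per_card + system_watts
    return dc_watts, dc_watts / wall_watts


load_dc, load_eff = efficiency(4, 262, 30, 1230)
idle_dc, idle_eff = efficiency(4, 50, 30, 275)
idle_load_fraction = idle_dc / PSU_COUNT / PSU_RATED_WATTS

print(f"full load: {load_dc} W out, {100 * load_eff:.0f}% efficient")
print(f"idle: {idle_dc} W out, {100 * idle_eff:.0f}% efficient")
print(f"each PSU at idle: {100 * idle_load_fraction:.0f}% of rated output")
```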

Rackable Chassis

A less complex design problem was how to pack all this hardware in a chassis. As shown in the picture below, I simply removed the top cover of a 1U chassis (Norco RPC-170), and placed the video cards vertically (no supports were necessary), each spaced by 2 cm or so for a good airflow. Each PCIe extender is long enough to reach the PCIe connectors on the motherboard (I bought four, in lengths of 15cm, 20cm, 30cm, and 30cm). The two 1U server PSUs are stacked on top of each other. The motherboard is not screwed to the chassis; it simply lies on an insulated mat.

The spacing between the video cards really helps: at an ambient temperature of 25 C, "aticonfig" reports maximum internal temperatures of 60-65 C at idle and 85-90 C under full load.

Final Thoughts

Comparison With Amazon EC2 GPU Instances

Amazon EC2 GPU instances are touted as inexpensive and perfect for brute forcing. Let's examine this.

On-demand Amazon EC2 GPU instances cost $2.10/hour and have two Nvidia Tesla M2050 cards, which the author of ighashgpu (fastest single hash MD5 brute forcer for Nvidia GPUs) estimates can achieve a total of 2790 Mhash/sec.

One would need more than 10 such instances to match the speed of the 4xHD 5970 computer I built for about $2700. Running 10 of these instances for 6 days or more would end up with hourly costs totalling $3024 and would already surpass the cost of my computer. Running them 30 days would cost $15k. Running them 8 months would cost $123k. Compare this to the operating costs for my computer, mainly power, which are a mere $90 per month (1230 Watt at $0.10/kWh), or $130 per month assuming an unremarkable PUE of 1.5 to account for cooling and other overheads:

- Buying and running 4 x HD 5970 for 8 months: 2700 + 8*130 = $3740

- Running 10 Amazon GPU instances for 8 months: 10 * 2.10 * 24 * 30.5 * 8 = $123000

You get the idea: financially, brute forcing in Amazon's EC2 GPU cloud makes no sense, in this example it would cost 33x more over 8 months. I recognize this is an extreme example with 4 highly optimized HD 5970, but the overall conclusion is the same even when comparing EC2 against a more modest computer with slower Nvidia cards.
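The comparison can be reproduced with a short Python sketch. All inputs are the figures quoted in this section (including the $130 monthly operating cost as stated); the function names and the break-even calculation are illustrative additions.

```python
HOURS_PER_MONTH = 24 * 30.5

EC2_INSTANCES = 10
EC2_DOLLARS_PER_HOUR = 2.10
BUILD_DOLLARS = 2700
OWN_DOLLARS_PER_MONTH = 130  # power plus overheads, as stated above


def ec2_cost(months):
    return EC2_INSTANCES * EC2_DOLLARS_PER_HOUR * HOURS_PER_MONTH * months


def own_cost(months):
    return BUILD_DOLLARS + OWN_DOLLARS_PER_MONTH * months


# Days after which renting has cost as much as buying and running the machine.
ec2_per_day = EC2_INSTANCES * EC2_DOLLARS_PER_HOUR * 24
own_per_day = OWN_DOLLARS_PER_MONTH / 30.5
break_even_days = BUILD_DOLLARS / (ec2_per_day - own_per_day)

# Raw electricity cost per month: 1.23 kW at $0.10 per kWh.
power_dollars_per_month = 1.23 * HOURS_PER_MONTH * 0.10

print(f"EC2, 8 months: ${ec2_cost(8):,.0f}")
print(f"own machine, 8 months: ${own_cost(8):,.0f}")
print(f"ratio: {ec2_cost(8) / own_cost(8):.0f}x")
print(f"break-even after {break_even_days:.1f} days")
```

The break-even point lands between 5 and 6 days, which is consistent with the 6-day figure above.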

To be more correct, brute forcing in Amazon's cloud makes financial sense in some cases, for example when performing it so infrequently and at such a small scale (less than a few days of computing time) that purchasing 1 GPU would be more expensive. On the opposite side of the scale, it may start to make sense again when operating at a scale so large (hundreds of GPUs) that one may not have the expertise or time to deploy and maintain such an infrastructure on-premise. At this scale, one would buy reserved instances for a one-time cost plus an hourly cost lower than on-demand instances: $0.75/hr.

One should also keep in mind that when buying EC2 instances, one is paying for hardware features that are useless and unused when brute forcing: full bisection 10 Gbps bandwidth between instances, terabytes of disk space, many CPU cores (irrelevant given the GPUs), etc. The power of Amazon's GPU instances is better realized when running more traditional HPC workloads that utilize these features, as opposed to "dumb" password cracking.

IL Compiler Optimizer

I spent a lot of time looking closely at how AMD's IL compiler optimizes and compiles whitepixel's IL code to native ISA instructions at run time. Anyone looking at the output ISA instructions will notice that it is a decent optimizer. For example step 1 in MD5 requires in theory 8 instructions to compute (3 boolean ops, 4 adds, 1 rotate):

A = B + ((A + F(B, C, D) + X[0] + T_0) rotate_left 7)

with F(x,y,z) = (x AND y) OR (NOT(x) AND z)

But because the intermediate hash values A B C D are known at the beginning of step 1, the CAL compiler precomputes "A + F(B, C, D)" which results in only 4 ISA instructions to execute this step. This plus other similar optimizations make the compiler contribute an overall perf improvement of about 3-4%. It might not sound like much, but it was certainly sufficiently noticeable that it prompted me to track down where the unexpected 3-4% extra performance came from.
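To make the folding concrete, here is a Python sketch of step 1 written both ways. It is only a model of the arithmetic, not the compiler's output. The `bitalign` function is an assumption modelled on the documented behaviour of AMD's `amd_bitalign` OpenCL built-in (the low 32 bits of the 64-bit value `hi:lo` shifted right), which is what makes a rotate a single operation. The initial values and the constant T_0 are the standard MD5 ones from RFC 1321.

```python
MASK = 0xFFFFFFFF
A0, B0, C0, D0 = 0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476  # MD5 initial state
T0 = 0xD76AA478  # MD5 constant for step 1


def bitalign(hi, lo, shift):
    """Assumed model of bitalign: low 32 bits of (hi:lo) >> shift."""
    return (((hi << 32) | lo) >> (shift & 31)) & MASK


def rotl(x, n):
    """Rotate left by n (1..31) expressed as a single bitalign."""
    return bitalign(x, x, 32 - n)


def step1_naive(x0):
    f = (B0 & C0) | (~B0 & D0 & MASK)                        # 3 boolean ops
    return (B0 + rotl((A0 + f + x0 + T0) & MASK, 7)) & MASK  # 4 adds, 1 rotate


# "A + F(B, C, D)" depends only on constants, so it can be computed ahead of time.
FOLDED = (A0 + ((B0 & C0) | (~B0 & D0 & MASK))) & MASK


def step1_folded(x0):
    t = (FOLDED + x0) & MASK  # add
    t = (t + T0) & MASK       # add
    t = rotl(t, 7)            # rotate
    return (B0 + t) & MASK    # add


x0 = int.from_bytes(b"pass", "little")  # first 4 characters of a candidate
print(step1_naive(x0) == step1_folded(x0))
```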

Expected Performance on HD 6900 series

The next-generation dual-GPU HD 6990 to be released in a few months is rumored to have 3840 ALUs at 775 MHz. If this is approximately true, then this card should perform about 9 Bhash/sec, or about 28% faster than the HD 5970.
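This estimate follows from the linear scaling with ALU count times clock frequency noted earlier. The Python sketch below redoes it; the HD 5970 figures of 3200 ALUs at 725 MHz are an assumption taken from AMD's published specifications rather than from this post, and the per-card hash rate is simply the measured 28630 Mhash/sec divided by four cards.

```python
# HD 5970: ALU count and clock are assumed from AMD's published specs.
HD5970_ALUS, HD5970_MHZ = 3200, 725
HD5970_MHASH = 28630 / 4  # measured rate of the 4-card system, per card

# HD 6990: rumored figures quoted above.
HD6990_ALUS, HD6990_MHZ = 3840, 775

scale = (HD6990_ALUS * HD6990_MHZ) / (HD5970_ALUS * HD5970_MHZ)
hd6990_mhash = HD5970_MHASH * scale

print(f"scaling factor: {scale:.2f}")
print(f"estimated HD 6990 rate: {hd6990_mhash / 1000:.1f} Bhash/sec")
```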

Hacking is Fun

You may wonder why I spent all this time optimizing cost and power. I like to research, learn, practice these types of electrical and mechanical hacks, and optimize low-level code. I definitely had a lot of fun working on this project. That is all I have to say :-)