Optical Emission Security – Frequently Asked Questions

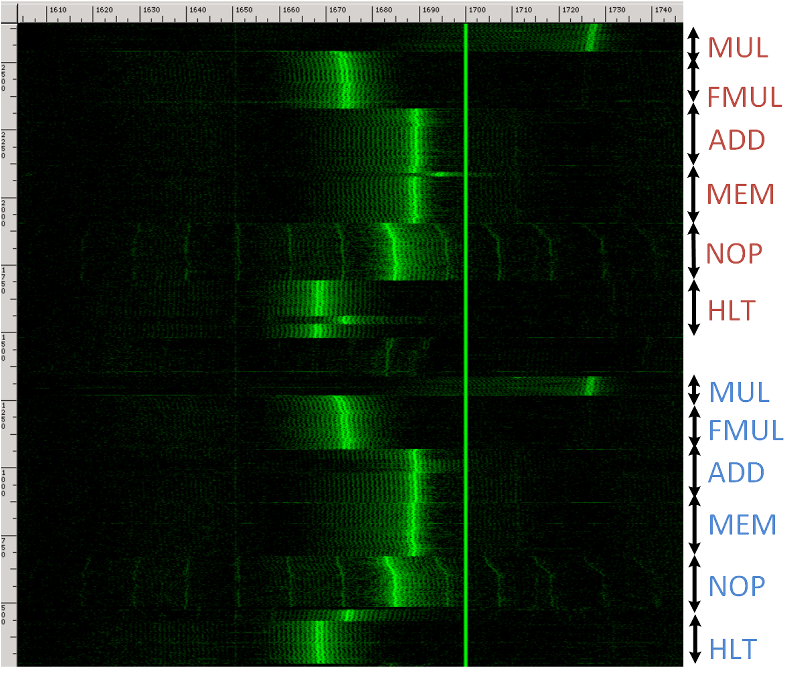

Markus Kuhn

In the paper Optical Time-Domain Eavesdropping Risks of CRT Displays, I describe a new eavesdropping technique that reconstructs text on computer screens from diffusely reflected light. This publication received wide media attention (BBC, New Scientist, Wired, Reuters, Slashdot). Here are answers to some of the questions I have received, along with some introductory information for interested readers looking for a more high-level summary than the full paper, which was mainly written for an audience of hardware-security and optoelectronics professionals.

Q: How does this new eavesdropping technique work?

To understand what is going on, you have to recall how a cathode-ray display works. An electron beam scans across the screen surface at enormous speed (tens of kilometers per second) and targets one pixel after another. In this way, it targets tens or hundreds of millions of pixels every second, converting electron energy into light. Even though each pixel shows an afterglow for longer than the time the electron beam needs to refresh an entire line of pixels, each pixel is much brighter while the e-beam hits it than during the remaining afterglow. My discovery of this very short initial brightness in the light decay curve of a pixel is what makes this eavesdropping technique work.

An image is created on the CRT surface by varying the electron beam intensity for each pixel. The room in which the CRT is located is partially illuminated by the pixels. As a result, the light in the room becomes a measure of the electron beam current. In particular, there is a little invisible ultrafast flash each time the electron beam refreshes a bright pixel that is surrounded by dark pixels on its left and right. So if you measure the brightness of a wall in this room with a very fast photosensor, and feed the result into another monitor that receives the exact same synchronization signals for steering its electron beam, you get to see an image like this:

2002 IEEE Symposium on Security and Privacy, Berkeley, California, May 2002.
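The principle described in the answer above can be illustrated with a small toy simulation. This is only a sketch under simplifying assumptions, not the method from the paper: the decay of each pixel is modelled as a strong, fast-decaying flash plus a weak, slowly decaying afterglow, there is no noise and no blanking interval, and the function names and all parameter values (`fast_decay`, `slow_gain`, `slow_decay`) are hypothetical choices made for illustration.

```python
def sensor_signal(image, fast_decay=0.1, slow_gain=0.02, slow_decay=0.99):
    """Return one room-brightness sample per pixel time for a raster scan.

    All decay parameters are illustrative assumptions, not measured values.
    """
    fast = 0.0  # short, bright initial flash of the pixels just hit
    slow = 0.0  # weak afterglow accumulated from all earlier pixels
    samples = []
    for row in image:          # the beam scans line by line ...
        for beam in row:       # ... and pixel by pixel within each line
            fast = fast * fast_decay + beam
            slow = slow * slow_decay + slow_gain * beam
            samples.append(fast + slow)
    return samples


def reconstruct(samples, width):
    """Rebuild a black/white raster from the sample stream.

    Knowing `width` plays the role of the shared synchronization signals.
    """
    threshold = 0.5 * max(samples)
    bits = [1 if s > threshold else 0 for s in samples]
    return [bits[i:i + width] for i in range(0, len(bits), width)]


# A tiny 4x8 test pattern: 1 = bright pixel, 0 = dark pixel.
image = [
    [0, 1, 0, 0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1, 0, 1, 0],
    [0, 1, 0, 0, 1, 1, 0, 0],
    [0, 1, 1, 0, 1, 0, 0, 0],
]

samples = sensor_signal(image)
recovered = reconstruct(samples, width=8)
for row in recovered:
    print("".join("#" if bit else "." for bit in row))
```

Because the flash is much brighter than the accumulated afterglow in this model, a simple threshold is enough to separate bright from dark pixels. With a real sensor, noise and the limited bandwidth of the receiver would make the recovery considerably harder.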

- The eavesdropped video signal is periodic over at least a few seconds, so periodic averaging over a few hundred frames can significantly reduce the noise.

- If you know exactly what font is used, many of the equalization and symbol detection techniques used in modems or pattern recognition applications can be applied to recover the text (remote optical character recognition).

- Optical filters can eliminate other colours from background light.

- A large sensor aperture (telelens, telescope) can improve the photon count.

- Reception is difficult if not impossible from well-lit rooms, in which CRTs do not make a visible contribution to the ambient illumination. Don't work in the dark.

- Do not assume that etched or frosted glass surfaces prevent this technique if there is otherwise a direct line of sight to the screen surface.

- This particular eavesdropping technique is not applicable to LCDs and other flat-panel displays that refresh all pixels in a row simultaneously.

- Make sure nobody can install eavesdropping equipment within a few hundred meters line-of-sight of your window.

- Use a screen saver that removes confidential information from the monitor in your absence.
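The point about periodic averaging in the list above can be made concrete with a short sketch. It assumes that the screen content stays the same from frame to frame, that the frames are already aligned sample by sample, and that the noise is independent Gaussian noise; the signal pattern, the noise level `noise_sigma`, and the frame count are hypothetical values chosen for illustration. Under these assumptions, averaging N frames reduces the noise amplitude by roughly a factor of the square root of N.

```python
import math
import random


def average_frames(frames):
    """Average aligned frames sample by sample."""
    count = len(frames)
    return [sum(column) / count for column in zip(*frames)]


def rms_error(estimate, reference):
    """Root-mean-square difference between an estimate and the clean signal."""
    total = sum((e - r) ** 2 for e, r in zip(estimate, reference))
    return math.sqrt(total / len(reference))


rng = random.Random(0)  # fixed seed so that the run is repeatable

# Hypothetical clean signal of one frame: a few bright pixels per line.
clean = [1.0 if (i % 8) in (1, 4) else 0.0 for i in range(64)]

noise_sigma = 2.0   # assumed noise level, larger than the signal itself
frame_count = 400   # "a few hundred frames"

frames = [
    [s + rng.gauss(0.0, noise_sigma) for s in clean]
    for _ in range(frame_count)
]

single_err = rms_error(frames[0], clean)
avg_err = rms_error(average_frames(frames), clean)
print(f"single frame RMS error: {single_err:.3f}")
print(f"averaged RMS error:     {avg_err:.3f}")
```

In this model a single frame is dominated by noise, while the average over a few hundred frames leaves a residual error well below the signal amplitude.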

created 2002-03-05 – last modified 2004-11-29 – http://www.cl.cam.ac.uk/~mgk25/emsec/optical-faq.html